Best Practices for Financial Modeling in a Business Case

A credible business case doesn't start with math; it starts with context. First align on the executive metric the C‑suite already agrees is urgent and important (the impact metric), then connect it to symptoms people see day‑to‑day, and finally to a canary metric that exposes the underlying behavior to change. This through‑line — Impact → Symptom → Canary — keeps the model honest and makes the numbers defensible.

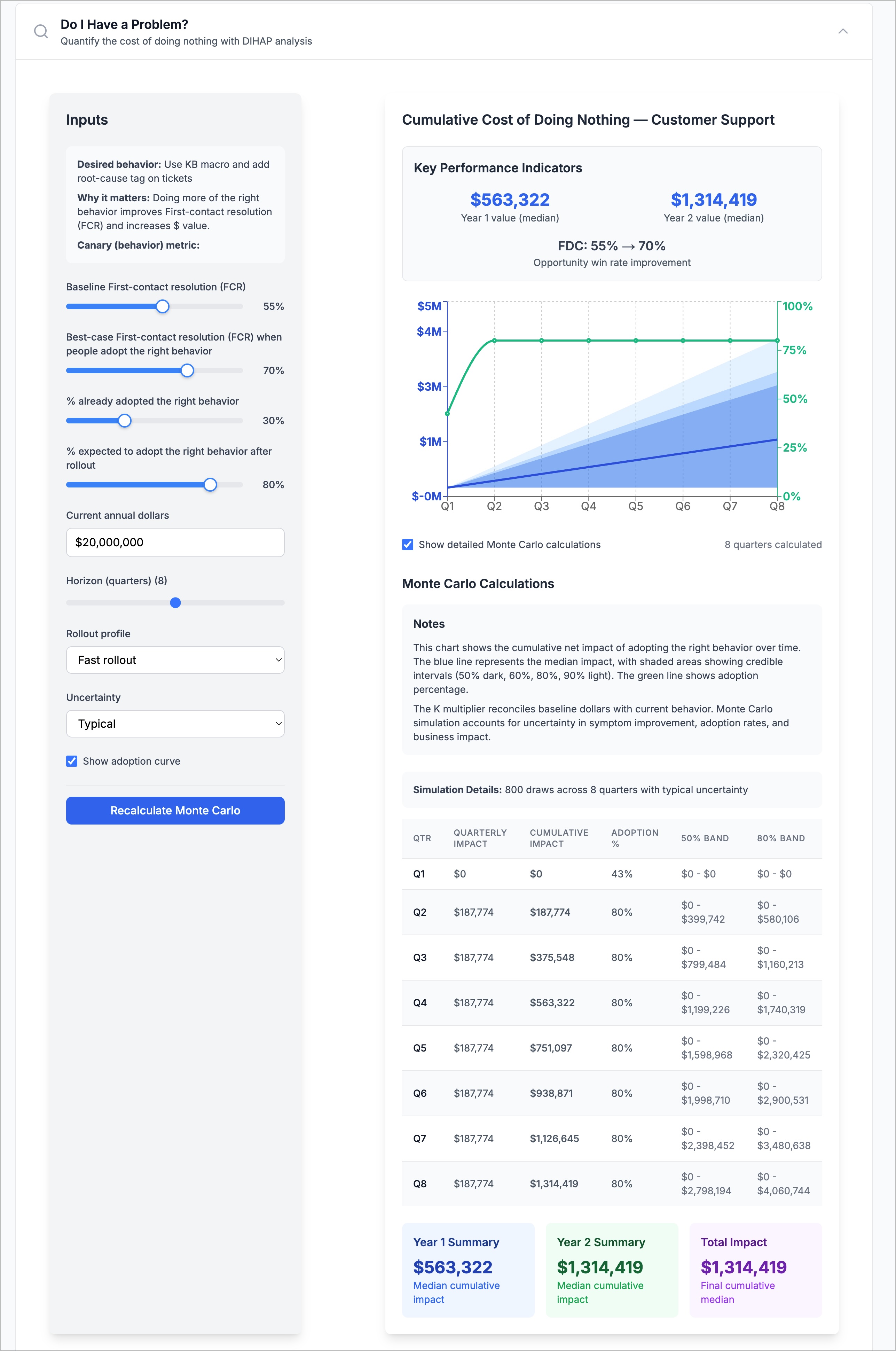

To keep stakeholders engaged, show an example before any equation. For instance: If more tickets are resolved on the first contact, overall support cost falls. Once the principle lands, bring in the math.

---

Summary: 10 Common Scenarios, Their Math Mode, and Useful Canaries

| Scenario | Math Mode | Symptom (what improves) | Example Canaries (leading indicators) |
|---|---|---|---|
| SaaS new‑logo sales | Rate | Opportunity win rate | % late‑stage opps with approved business case |
| Hardware channel sales | Rate | Partner conversion rate | % partner quotes with demo + reference |
| Manufacturing (discrete) | Rate | First‑pass yield | % CTQ stations with SPC + error‑proofing |
| Field service uptime | Hybrid | % incidents resolved within SLA | % work orders triaged & kit confirmed |
| Customer support | Rate | First‑contact resolution (FCR) | % tickets with KB macro + root‑cause tag |
| HR recruiting | Rate | Offer acceptance rate | % reqs using structured process |
| HR retention | Rate | 90‑day retention | % new hires with 7/30/60 check‑ins |
| Finance cash | Rate | % invoices collected ≤30 days | % invoices e‑invoiced & 3‑way matched |
| Logistics delivery | Rate | On‑time in‑full (OTIF) | % loads with dock lock + scan‑to‑load |
| IT operations | Hybrid | % P1 incidents restored <60 min | % incidents with runbook & rollback |

Why canaries? A canary is the causal behavior that separates "problem present" from "problem fixed." It must be measurable, familiar, and ideally sourced inside the buyer's org.

---

The Variables (map them to the screenshot)

- S₀: Current symptom rate (baseline).

- S₁: Target symptom rate when the behavior is done right.

- a₀ / a₁: Current/target adoption of the behavior.

- M₀: Annual baseline dollars affected by the problem.

- Rollout & Uncertainty: How adoption ramps and how wide you expect the actuals to vary.

Derived variables

- K: The scaling factor tying rates to money.

- S(t), K(t): Time‑varying versions as adoption and scale change.

- a_eff(t): Effective adoption, adjusted for proficiency/learning.

---

Start with Examples, Then Show the Equations

Example (Support FCR): If baseline FCR is 55% and teams that use the new workflow achieve 70%, moving effective adoption toward 80% increases resolved‑on‑first‑touch, lowering handle time and escalations, which lowers cost. The same pattern generalizes to sales win rate, manufacturing yield, and OTIF.

The foundational scaling constant

A single constant connects money to rates:

$$K=\frac{M_0}{a_0S_1+(1-a_0)S_0}$$

Interpretation: today's annual cost \(M_0\) is "caused" by a weighted mix of two states: people already doing it right at \(S_1\) and everyone else at \(S_0\). \(K\) scales the rate into dollars, so once \(K\) is known you can project quarters by changing adoption and/or scale.
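To make the constant concrete, here is a minimal Python sketch of \(K = M_0 / (a_0S_1 + (1-a_0)S_0)\) using the FCR example above. It assumes the symptom is expressed as the problem rate (1 − FCR, the share of tickets *not* resolved on first contact) so that cost moves with the symptom, and it uses hypothetical values for current adoption (\(a_0\) = 20%) and annual cost (\(M_0\) = USD 2,000,000). The helper names `blended_rate` and `scaling_constant` are illustrative, not part of any tool.

```python
def blended_rate(adoption: float, s0: float, s1: float) -> float:
    """Symptom rate when an `adoption` share performs at s1 and the rest at s0."""
    return adoption * s1 + (1.0 - adoption) * s0


def scaling_constant(m0: float, a0: float, s0: float, s1: float) -> float:
    """K = M0 / (a0*S1 + (1 - a0)*S0): dollars per unit of symptom rate."""
    return m0 / blended_rate(a0, s0, s1)


# Hypothetical inputs; the symptom is the problem rate, i.e. 1 - FCR.
S0 = 1 - 0.55    # baseline: 45% of tickets not resolved on first contact
S1 = 1 - 0.70    # with the new workflow: 30% not resolved on first contact
A0 = 0.20        # assumed share of teams already using the workflow
M0 = 2_000_000   # assumed annual support cost tied to the problem (USD)

K = scaling_constant(M0, A0, S0, S1)
cost_at_80 = K * blended_rate(0.80, S0, S1)   # annual cost at 80% effective adoption
print(f"K = {K:,.0f}; annual cost at 80% adoption = {cost_at_80:,.0f}")
```

At 80% adoption the blended problem rate falls from 0.42 to 0.33, so annual cost drops from 2.0M to about 1.57M. Plugging \(a_0\) back in returns \(M_0\), a quick sanity check on \(K\). For revenue-type symptoms such as win rate, the rate can be used directly because more of it is worth more.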

Three math modes cover ~90% of cases

- Rate Mode (execution improves, scale constant)

$$M_t = S(t) \times \frac{K}{4}$$

Use when the footprint (number of cases, opportunities, units) is steady but behavior improves — e.g., higher FCR, better win rate, better FPY.

- Scale Mode (scale changes, execution constant)

$$M_t = S_0 \times \frac{K(t)}{4}$$

Use when the core behavior is stable but the volume grows — e.g., pipeline expansion or throughput scaling.

- Hybrid Mode (both improve)

$$M_t = S(t) \times \frac{K(t)}{4}$$

Use when adoption improves execution while the operation scales — common in field service and IT operations.
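The three modes differ only in which term varies by quarter. The sketch below applies each formula as written; dividing by 4 converts the annual constant into quarterly dollars. The function names and every input (problem rates, a four-quarter adoption path, an assumed \(K\), and an assumed 5% per-quarter growth in scale) are hypothetical, and for brevity \(S(t)\) is blended from a given adoption path where a full model would use effective adoption.

```python
from typing import Sequence


def rate_mode(s_t: Sequence[float], k: float) -> list[float]:
    """Rate Mode: M_t = S(t) * K / 4 (execution changes, scale fixed)."""
    return [s * k / 4 for s in s_t]


def scale_mode(s0: float, k_t: Sequence[float]) -> list[float]:
    """Scale Mode: M_t = S0 * K(t) / 4 (scale changes, execution fixed)."""
    return [s0 * k / 4 for k in k_t]


def hybrid_mode(s_t: Sequence[float], k_t: Sequence[float]) -> list[float]:
    """Hybrid Mode: M_t = S(t) * K(t) / 4 (both change)."""
    if len(s_t) != len(k_t):
        raise ValueError("S(t) and K(t) must cover the same quarters")
    return [s * k / 4 for s, k in zip(s_t, k_t)]


# Hypothetical inputs: problem rates S0/S1, a four-quarter adoption path,
# an assumed scaling constant K, and an assumed 5% scale growth per quarter.
S0, S1 = 0.45, 0.30
adoption = [0.20, 0.40, 0.60, 0.80]
s_t = [a * S1 + (1 - a) * S0 for a in adoption]   # blended symptom rate S(t)
K = 4_761_905
k_t = [K * 1.05 ** q for q in range(4)]           # K(t)

for name, series in [("rate", rate_mode(s_t, K)),
                     ("scale", scale_mode(S0, k_t)),
                     ("hybrid", hybrid_mode(s_t, k_t))]:
    print(f"{name:<6}", [round(m) for m in series])
```

Passing a constant \(K(t)\) to `hybrid_mode` reproduces Rate Mode, which is a simple way to show each effect independently.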

> Best practice: Always begin with the rate‑mode story; it's the simplest to validate with today's canary and symptom. If scale also changes, layer in \(K(t)\) explicitly so reviewers can see each effect independently.

---

Adoption Curves: Model behavior change realistically

Behavior rarely jumps to 80% in one quarter. Model raw adoption with a logistic S‑curve, and separate it from effective adoption \(a_{\text{eff}}(t)\), which discounts raw adoption by proficiency.

Proficiency itself follows a normalized logistic curve (slow → fast → slow). This keeps early quarters conservative and avoids over‑crediting change before teams are competent.

> Best practice: Expose time‑to‑value, ramp duration, proficiencies, and ceilings as explicit parameters; clamp rates to realistic bounds (e.g., adoption ≤95%) to prevent impossible gains.
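One way to encode the ramp, sketched under stated assumptions: raw adoption \(a(t)\) follows a logistic curve rescaled to run from \(a_0\) to \(a_1\) over a ramp window, proficiency \(p(t)\) follows its own normalized logistic curve, and effective adoption credits only new adopters by proficiency, \(a_{\text{eff}}(t) = a_0 + (a(t) - a_0)\,p(t)\). That combination rule, the parameter names (`ramp_quarters`, `proficiency_quarters`, `steepness`, `ceiling`), and their default values are hypothetical choices for illustration, not the article's definitive formula.

```python
import math


def normalized_logistic(t: float, horizon: float, midpoint: float, steepness: float) -> float:
    """Logistic S-curve rescaled to 0 at t=0 and 1 at t=horizon (slow -> fast -> slow)."""
    def sig(x: float) -> float:
        return 1.0 / (1.0 + math.exp(-steepness * (x - midpoint)))
    t = min(max(t, 0.0), horizon)          # hold the curve flat outside the window
    start, end = sig(0.0), sig(horizon)
    return (sig(t) - start) / (end - start)


def effective_adoption(q: int, a0: float, a1: float, ramp_quarters: float = 6,
                       proficiency_quarters: float = 4, steepness: float = 1.5,
                       ceiling: float = 0.95) -> float:
    """Hypothetical a_eff(q) = a0 + (a(q) - a0) * p(q), clamped to a realistic ceiling."""
    a1 = min(a1, ceiling)                  # never target more than the ceiling
    raw = a0 + (a1 - a0) * normalized_logistic(q, ramp_quarters, ramp_quarters / 2, steepness)
    prof = normalized_logistic(q, proficiency_quarters, proficiency_quarters / 2, steepness)
    # Existing adopters (a0) are assumed already proficient; new adopters count
    # only as fast as they become competent.
    return min(a0 + (raw - a0) * prof, ceiling)


# Example: ramp from 20% to 80% adoption over six quarters.
for q in range(9):
    print(q, round(effective_adoption(q, a0=0.20, a1=0.80), 3))
```

Because both curves start at zero, quarter 0 returns exactly \(a_0\) and early quarters stay close to it; the ceiling clamp enforces the "adoption ≤95%" bound even if a target above it is passed in.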

---

Monte Carlo: Communicate risk the CFO respects

Deterministic point estimates hide risk. Run many draws with uncertainty set to None, Narrow, Typical, or Wide to generate ranges around quarterly and cumulative impact. Report the median (p50) and show the 50% and 80% bands (p25–p75 and p10–p90) so decision‑makers see both upside and downside.

At a high level, vary the target rate \(S_1\), target adoption \(a_1\), and (if applicable) scale \(K\) around their means using a reasonable distribution, then recompute the quarterly impacts to get quantiles and bands.

> Best practice: Use tighter coefficients of variation (CVs) when the canary is well‑measured and behavior is under managerial control; use wider CVs for messy, multi‑team changes. Name the uncertainty choice in the deck.
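A minimal Monte Carlo sketch using only Python's standard library. The mapping from None/Narrow/Typical/Wide to CVs (0, 5%, 15%, 30%), the normal distribution with clamping, the linear four-quarter ramp, and all input values are assumptions for illustration; swap in whatever the buyer signs off on. It varies \(S_1\), \(a_1\), and \(K\), recomputes Rate Mode savings each draw, and returns percentiles of first-year cumulative impact.

```python
import random
import statistics

# Hypothetical mapping from the uncertainty setting to a coefficient of variation.
CV_BY_LEVEL = {"None": 0.0, "Narrow": 0.05, "Typical": 0.15, "Wide": 0.30}


def draw(mean: float, cv: float, rng: random.Random, lo: float, hi: float) -> float:
    """Normal draw with sd = cv * mean, clamped to [lo, hi] to rule out impossible values."""
    if cv == 0:
        return mean
    return min(max(rng.gauss(mean, cv * mean), lo), hi)


def simulate(level: str, n: int = 5000, seed: int = 7) -> dict[str, float]:
    """Percentiles of first-year cumulative savings (Rate Mode) under one uncertainty level."""
    cv = CV_BY_LEVEL[level]
    rng = random.Random(seed)                 # seeded so reruns are reproducible
    s0, a0 = 0.45, 0.20                       # assumed baseline problem rate and adoption
    s1_mean, a1_mean, k_mean = 0.30, 0.80, 4_761_905  # assumed means
    totals = []
    for _ in range(n):
        s1 = draw(s1_mean, cv, rng, 0.0, s0)
        a1 = draw(a1_mean, cv, rng, a0, 0.95)
        k = draw(k_mean, cv, rng, 0.0, float("inf"))
        today = a0 * s1 + (1 - a0) * s0      # blended rate before the change
        total = 0.0
        for q in range(1, 5):                 # assumed linear ramp from a0 to a1
            a_t = a0 + (a1 - a0) * q / 4
            s_t = a_t * s1 + (1 - a_t) * s0
            total += (today - s_t) * k / 4    # quarterly savings vs. today
        totals.append(total)
    cuts = statistics.quantiles(totals, n=100)  # 99 percentile cut points
    return {f"p{p}": cuts[p - 1] for p in (10, 25, 50, 75, 90)}


print(simulate("Typical"))
```

The p25–p75 and p10–p90 ranges are the 50% and 80% bands. At the `None` level every draw is identical, so all percentiles collapse to the deterministic estimate, which makes it easy to show reviewers how much the band widens at each setting.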

---

Canary → Impact: Keep the thread visible

A strong model traces a single line: If the canary improves, the symptom improves, and here's what that's worth. Document the canary definition, provide a baseline "normal," and encourage buyers to replace placeholder values with their own data.

For example, if deals with a business case have meaningfully higher win rates, the impact math asks: What if every deal won like the "with business case" cohort? Start with typical placeholders; then switch to the buyer's numbers.
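The "what if every deal won like the cohort" question is the same blended-rate arithmetic, with the canary share playing the role of adoption. A small sketch with placeholder numbers (400 opportunities a year, USD 50,000 average deal, 25% of deals with a business case, 30% vs. 18% win rates), all hypothetical; the function name `cohort_uplift` is illustrative.

```python
def cohort_uplift(opps_per_year: int, avg_deal: float, share_with_case: float,
                  win_with: float, win_without: float, target_share: float = 1.0) -> float:
    """Extra annual bookings if the canary share rises from share_with_case to target_share."""
    def wins(share: float) -> float:
        # Blended win count: `share` of deals win like the cohort, the rest like today.
        return opps_per_year * (share * win_with + (1 - share) * win_without)
    return (wins(target_share) - wins(share_with_case)) * avg_deal


# Placeholder values (hypothetical); replace with the buyer's CRM data.
uplift = cohort_uplift(opps_per_year=400, avg_deal=50_000,
                       share_with_case=0.25, win_with=0.30, win_without=0.18)
print(f"Bookings uplift if every deal won like the cohort: ${uplift:,.0f}")
```

With these placeholders, wins rise from 84 to 120 a year, an uplift of 36 deals or about USD 1.8M in bookings. Setting `target_share` below 1.0 models a partial rollout.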

---

Comparing "Do Nothing," DIY, and Vendor

Every case should compare three options with the same lens:

- Benefit = Impact × Risk (the probability the fix works in practice).

- Costs = People + tools + change costs over time.

- Net = Benefit − Cost, shown per quarter and cumulatively.

Use recognizable benchmarks, get explicit sign‑off on risk percentages, and show timelines; the "Do Nothing" line should equal the modeled cost of impact.
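To compare the options through one lens, the sketch below treats each option's quarterly total cost as the unresolved problem cost, \(\text{Impact} \times (1 - p)\) where \(p\) is the signed-off probability that the fix works, plus its spend. Do Nothing (\(p = 0\), no spend) then equals the modeled cost of impact, and Net = Benefit − Cost is exactly the gap to Do Nothing. The function name `option_view` and all figures are hypothetical.

```python
from itertools import accumulate


def option_view(impact: list[float], p_success: float, spend: list[float]):
    """Return (total cost, net, cumulative net) per quarter for one option.

    total cost = unresolved problem cost + spend = impact * (1 - p) + spend
    net        = risked benefit - spend          = impact * p - spend
    """
    if len(impact) != len(spend):
        raise ValueError("impact and spend must cover the same quarters")
    total = [i * (1 - p_success) + s for i, s in zip(impact, spend)]
    net = [i * p_success - s for i, s in zip(impact, spend)]
    return total, net, list(accumulate(net))


# Hypothetical inputs: avoidable problem cost per quarter, and each option's
# probability of working (signed off by the buyer) plus quarterly spend.
impact = [100_000] * 4
options = {
    "Do Nothing": (0.0, [0, 0, 0, 0]),
    "DIY": (0.50, [40_000, 40_000, 30_000, 30_000]),
    "Vendor": (0.80, [60_000, 25_000, 25_000, 25_000]),
}

for name, (p, spend) in options.items():
    total, net, cum = option_view(impact, p, spend)
    print(f"{name:<10} total={[round(x) for x in total]} cumulative_net={[round(x) for x in cum]}")
```

With these placeholders, DIY's cumulative net after four quarters is 60,000 and Vendor's is 185,000, while Do Nothing's total-cost line stays at the full 100,000 per quarter.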

> Best practice: Put the CFO view up front: one slide with the impact driver, the risked benefit, the costs, and the median + bands from your Monte Carlo. Keep the mechanics in the appendix but make every assumption visible and swappable by the buyer.

---

Putting it Together

- Set baseline \(S_0\), target \(S_1\), current \(a_0\), target \(a_1\), and \(M_0\).

- Compute \(K\) from today's mix of right‑vs‑wrong behavior:

$$K=\frac{M_0}{a_0S_1+(1-a_0)S_0}$$

- Pick Rate, Scale, or Hybrid mode; map quarters with adoption and proficiency.

- Run Monte Carlo at the right uncertainty level; present median and bands.

- Compare Do Nothing, DIY, Vendor with risked benefits and full costs over time.

When a business case follows these practices—context first, examples before equations, canary‑to‑impact logic, adoption realism, and quantified uncertainty—stakeholders can say "yes" with confidence because the model reads like their business, uses their numbers, and makes risks explicit.
